New technology that captures faces and monitors tennis players’ emotions may be in its early stages, but could be a game changer.

The international tennis circuit is a high-stakes, high-emotion affair. Players are often playing for millions of dollars, in front of large audiences.

While poise is highly prized, what happens when they unleash a tantrum? Does a smashed racquet allow them to release their tension and focus on the next point? Does it unnerve their opponent, provide a competitive advantage – or derail their concentration?

In a possible future scenario, a sports commentator might simply reach for a facial recognition app to ‘decode’ the emotional state of that player and predict for viewers what might happen next.

Unlocking the secrets of an athlete’s ‘inner’ mental game is the goal of a unique collaboration between Monash Business School and Tennis Australia, which is assessing popular facial recognition technology for mapping players’ expressions during a match.

“The aim of this project is to develop methods to collect accurate information about the facial expressions of elite tennis athletes during match play,” explains Professor Dianne Cook from the Department of Econometrics and Business Statistics, who is overseeing a group of undergraduate students on the project.

Although the work is still in its very early stages, its results will in future be used to assess players’ mental state during matches and to attempt to map what effect emotional fluctuations might have had on the outcome.

Eventually, this work could also lead to sports analysts using facial recognition technology in real time during matches. The project could even help inform how tech giants such as Google continue to improve facial recognition technology.

Facial recognition and emotions

The project began last year by building a training data set in which faces in a large collection of video stills from footage of the 2016 Australian Open were manually tagged and annotated.

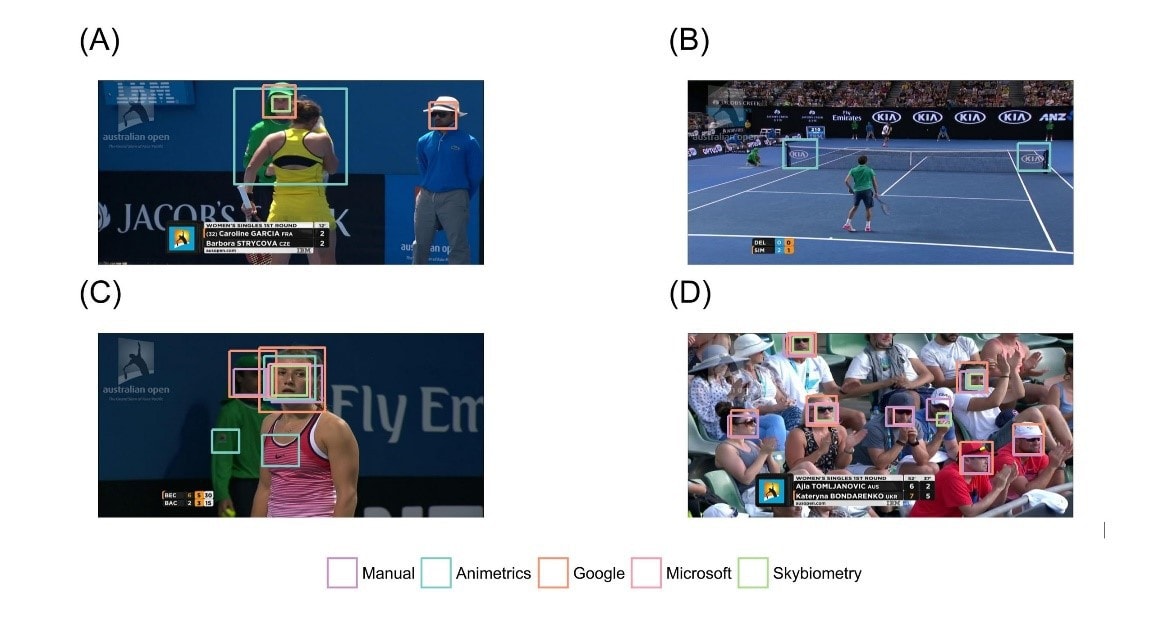

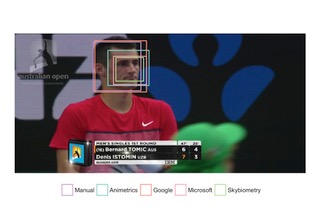

The next step has been to evaluate the performance of several popular facial recognition Application Programming Interfaces (APIs) from Google, Microsoft, Skybiometry and Animetrics.

Almost all of these APIs also suggest the emotional state of each face they recognise. But as one of the undergraduate students working with Professor Cook on the project points out, the work is stretching the boundaries of what this technology was originally designed to do.

“Most facial recognition software began with the intention of security applications,” Stephanie Kobakian explains. “There are others, such as Facebook, which links images of people with their profiles. This means the popular recognition software was not intended to be used in a fast-paced, elite sports environment.”

Professor Cook agrees that it is quite difficult to read players’ emotional states correctly. The APIs report common emotions such as happiness, sadness, anger and disgust, as well as a ‘neutral’ state.

For the most part, the researchers found that players were reported as ‘neutral’ or as showing just one emotion. This is perhaps neither a surprise nor a limitation of the technology: most players would be trying to mask their emotional state from their opponents.

“Sports emotions are different because they are trying not to give away what they are feeling. It’s very much individual-based, so they (the researchers) looked at getting a baseline for individuals and getting some subtle clues,” Professor Cook says.
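To make the idea of a per-player baseline concrete, here is a minimal sketch. It assumes an emotion API returns a score between 0 and 1 for each emotion in each frame, which is how several of these services present their output; the function names, data layout and the two-standard-deviation threshold are hypothetical choices for illustration, not the researchers’ actual method. Each frame is compared against that player’s own average, so a normally stony-faced player showing a small flicker of anger stands out even if the raw score is low.

```python
from collections import defaultdict
from statistics import mean, pstdev

# A frame is (player, {emotion: score}), with scores assumed to lie in [0, 1].
# This layout is a hypothetical simplification of an emotion API's output.


def player_baselines(frames):
    """Return {player: {emotion: (mean, population_sd)}} computed from all of that player's frames."""
    by_player = defaultdict(lambda: defaultdict(list))
    for player, scores in frames:
        for emotion, value in scores.items():
            by_player[player][emotion].append(value)
    return {
        player: {emotion: (mean(vals), pstdev(vals)) for emotion, vals in emotions.items()}
        for player, emotions in by_player.items()
    }


def deviations(frames, baselines):
    """Express each frame's scores as z-scores against that player's own baseline.

    Emotions that never vary for a player (sd == 0) get a z-score of 0.
    """
    out = []
    for player, scores in frames:
        z = {}
        for emotion, value in scores.items():
            mu, sd = baselines[player][emotion]
            z[emotion] = 0.0 if sd == 0 else (value - mu) / sd
        out.append((player, z))
    return out


def flag_unusual(devs, threshold=2.0):
    """Indices of frames where any emotion is more than `threshold` SDs from the player's baseline."""
    return [
        i for i, (_, z) in enumerate(devs)
        if any(abs(v) > threshold for v in z.values())
    ]
```

Normalising within each player, rather than against a single league-wide average, reflects Professor Cook’s point that sports emotions are “very much individual-based”; the choice of z-scores is simply one convenient way to express “unusual for this person”.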

Quirks and foibles

Apart from these natural challenges, the project had a significant technical component: building a web app used to manually tag faces in a training set of 6,500 images, creating a benchmark, says Professor Cook.

“It allowed the annotating of faces with additional information such as the angle of the camera, the background and the situation displayed on (the official broadcaster) Network Seven’s broadcasts at the time the face was captured,” she says.

If a face was visible in a still and considered “reasonable” for an API to detect, it was highlighted in the image. The APIs were then evaluated on how successfully they detected these faces.

The research revealed quirks in the software. For instance, some applications were more prone than others to picking out faces in the crowd behind the players, or to mistaking shirts, other body parts, the net, or even logos on the net and walls for faces. Others were tricked by shadows into making incorrect detections.
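One common way to score a face detector against hand-annotated faces is to match each annotated face to the detection that overlaps it most, measured by intersection-over-union (IoU). The sketch below is a hedged illustration of that general technique, not the project’s published code: the box format, the greedy matching and the 0.5 IoU threshold are all assumptions. Annotated faces with no matching detection count as misses, and detections left over, such as a logo or a shadow mistaken for a face, count as false positives.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box in pixel coordinates (assumed format)."""
    left: float
    top: float
    right: float
    bottom: float


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two boxes: 0 for no overlap, 1 for identical boxes."""
    w = min(a.right, b.right) - max(a.left, b.left)
    h = min(a.bottom, b.bottom) - max(a.top, b.top)
    if w <= 0 or h <= 0:
        return 0.0
    inter = w * h
    area_a = (a.right - a.left) * (a.bottom - a.top)
    area_b = (b.right - b.left) * (b.bottom - b.top)
    return inter / (area_a + area_b - inter)


def evaluate(annotated: list[Box], detected: list[Box], threshold: float = 0.5) -> tuple[int, int, int]:
    """Greedily match an API's detections to hand-annotated faces in one image.

    Returns (hits, misses, false_positives).
    """
    unmatched = list(detected)
    hits = 0
    for face in annotated:
        best = max(unmatched, key=lambda d: iou(face, d), default=None)
        if best is not None and iou(face, best) >= threshold:
            hits += 1
            unmatched.remove(best)  # each detection may match at most one face
    return hits, len(annotated) - hits, len(unmatched)


def detection_rate(images: list[tuple[list[Box], list[Box]]]) -> tuple[float, int]:
    """Aggregate over (annotated, detected) pairs: (share of annotated faces found, total false positives)."""
    hits = total = false_pos = 0
    for annotated, detected in images:
        h, _, f = evaluate(annotated, detected)
        hits += h
        total += len(annotated)
        false_pos += f
    return (hits / total if total else 0.0), false_pos
```

Running `detection_rate` once per API over the same benchmark images would give directly comparable figures; the greedy matching is a simplification, and stricter evaluations sometimes use optimal (Hungarian) assignment instead.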

In the annotation web app, selecting an area displayed a group of questions about that specific face. This allowed features to be recorded, such as the accessories worn, the amount of shade on the face, and whether the face belonged to a player, a staff member or a crowd member.

In the end, the team found that Google’s API outperformed the others by a factor of two. “Considering the images used during our study were stills derived from broadcast video files, it would be useful to extend further research to deal with the video files directly,” Professor Cook says.

While the research has focused on finding the right face recognition software for ongoing work, Professor Cook says it may have broader applications beyond tennis.

“It really is what a lot of sports analysts are looking for right now and it could be used across other individual sports,” she says.

“This really is just the beginning. I think it will explode in terms of the number of resources that will be involved as more groups like Tennis Australia become aware of the impact it can have in assessing the outcome of a game.”